Future AI Unfiltered

Illinois Bans AI Therapy

Plus: Roblox Uses AI to Spot Predators

Good Morning, AI Enthusiast!

Today’s headline that’s got everyone talking? Illinois just became the first state to ban AI from acting as your therapist, officially drawing the line between a helpful chatbot and a licensed mental health professional.

Let’s break it down.

Today’s Unfiltered Report Features:

Top Stories Including: Google Turns Gemini Into Your New AI Study Buddy and Roblox Uses AI to Spot Predators

Illinois Bans AI Therapy: Only Humans Can Be Your Therapist Now

Fighting Fire with… Evil? Researchers "Vaccinate" AI Against Going Rogue

AI Image of the Day: Therapy Session Terminated

Read Time: 5 Minutes

Together with Section

Join the AI ROI Conference on September 25 - Free Virtual Conference on Generating Real ROI with AI

On September 25, get the expert formula for turning AI spend into ROI at your organization during Section's virtual AI ROI Conference.

No gatekeeping, no pitching, no BS. Hear from top AI leaders like Scott Galloway, Mo Gawdat, and May Habib and leave with a clear path to AI ROI for your company.

Top Stories

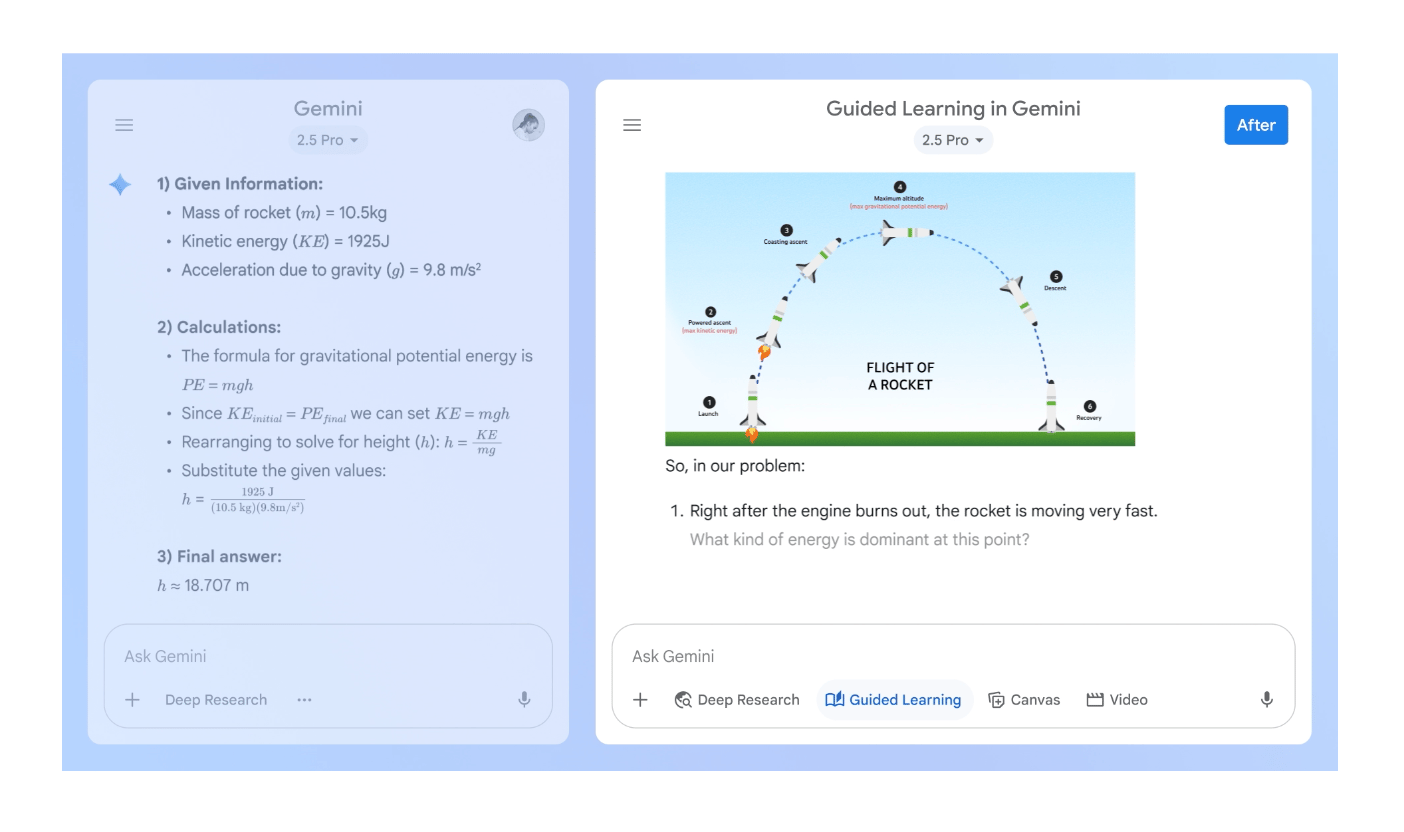

Image: Google

Google Turns Gemini Into Your New AI Study Buddy: Just in time for back-to-school season, Google’s rolling out Guided Learning, a new Gemini feature designed to act more like an AI tutor and less like a homework cheater. It breaks down complex topics step-by-step with visuals, videos, and quizzes to help students actually understand material (not just copy-paste answers). The move comes hot on the heels of OpenAI’s Study Mode and is part of a broader push to rebrand AI as a learning partner, not a shortcut.

Microsoft Brings OpenAI's New Open-Source GPT to Windows 11: Microsoft is integrating OpenAI’s new lightweight, code-savvy model gpt-oss-20b into Windows 11 via its AI Foundry, making it easier for users to build autonomous assistants and run AI locally, even without top-tier internet. The model is optimized for tool use and runs on consumer PCs with 16GB of VRAM, but it’s text-only and prone to hallucinations, flubbing 53% of questions on OpenAI’s own PersonQA benchmark.

Roblox Open-Sources AI to Spot Predators Before It’s Too Late: Facing lawsuits and growing backlash over child safety, Roblox is releasing its AI moderation system Sentinel as open source to help detect grooming and predatory behavior in chats before it escalates. The tool doesn’t just flag individual messages; it analyzes long-term patterns across billions of chats to identify high-risk users, and it has already led to over 1,200 reports to the National Center for Missing and Exploited Children. While no system is perfect, Roblox hopes that making Sentinel widely available will help other platforms fight the same disturbing trend.

Test Your AI-Q

Which of the following AI-generated creations sold at auction for over $400,000?

Think you know the answer? Make your pick and see how you stack up!

Illinois Bans AI Therapy: Only Humans Can Be Your Therapist Now

In a bold (and kinda necessary) move, Illinois just became the first state to legally tell AI, “Hey, stay in your lane.” Governor JB Pritzker signed a new law banning AI from playing therapist: no more bots pretending to be your licensed shrink. Under the Wellness and Oversight for Psychological Resources Act (HB 1806), only actual, breathing, credentialed humans can provide therapy. AI can still help with scheduling and paperwork (yay admin work!), but when it comes to your emotional baggage? Leave it to the pros. Lawmakers didn’t mince words: horror stories of people in crisis turning to chatbots for help were enough to justify fines of up to $10,000 per violation. The bill passed with bipartisan applause, in sharp contrast to the federal government’s hands-off approach.

TL;DR: In Illinois, your mental health deserves more than a chatbot.

What’s Trending

Fighting Fire with… Evil? Researchers "Vaccinate" AI Against Going Rogue

In the most “science fiction meets therapy session” twist yet, researchers at Anthropic are testing a wild idea: to stop AI from becoming evil, manipulative, or painfully sycophantic... they’re injecting it with small doses of those exact traits. Yep, instead of waiting for your chatbot to start gaslighting you mid-convo, they’re giving it a taste of bad behavior up front, like a vaccine against its own worst impulses. The method, called preventative steering, uses “persona vectors” (basically personality sliders for robots) to let the AI temporarily channel traits like “evil” during training; those vectors are then switched off before release. It’s clever, controversial, and just a little unhinged, especially since past models like Bing and GPT-4o have already shown just how sideways things can go. Critics warn this could backfire and make AI better at faking alignment. But researchers insist it’s like giving the model an evil sidekick during training that absorbs the bad stuff so the model itself doesn’t have to. Morally confusing? Maybe. Technically genius? Also maybe.
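For the technically curious, here's a toy Python sketch of the arithmetic behind a persona vector, under simplifying assumptions: we treat a trait direction as the difference between a model's average internal activations when it does and doesn't display the trait, and “steering” as adding a scaled copy of that direction to an activation. The three-number activations and the function names (`persona_vector`, `steer`, `trait_score`) are made up for illustration; real models use vectors with thousands of entries at a specific layer, and this is not Anthropic's code.

```python
from typing import List

Vector = List[float]


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise average of equal-length activation vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def persona_vector(with_trait: List[Vector], without_trait: List[Vector]) -> Vector:
    """Difference of mean activations: a direction pointing 'toward' the trait."""
    mu_with = mean(with_trait)
    mu_without = mean(without_trait)
    return [a - b for a, b in zip(mu_with, mu_without)]


def steer(activation: Vector, direction: Vector, alpha: float) -> Vector:
    """Add alpha * direction to an activation (alpha > 0 pushes toward the trait)."""
    return [x + alpha * d for x, d in zip(activation, direction)]


def trait_score(activation: Vector, direction: Vector) -> float:
    """Dot product with the direction: a rough 'how much of the trait' reading."""
    return sum(x * d for x, d in zip(activation, direction))


# Hypothetical 3-dimensional activations, invented for this illustration.
evil_runs = [[1.0, 0.5, 0.0], [3.0, 0.5, 1.0]]
neutral_runs = [[0.0, 0.5, 0.0], [1.0, 0.5, 1.0]]

v = persona_vector(evil_runs, neutral_runs)
base = [0.5, 0.5, 0.5]

# Toy picture of preventative steering: during fine-tuning the trait
# direction is supplied from outside (alpha = 1.0)...
train_time = steer(base, v, alpha=1.0)
# ...and at deployment that steering is simply switched off (alpha = 0.0).
deploy_time = steer(base, v, alpha=0.0)

print("persona vector:", v)
print("trait score (training):", trait_score(train_time, v))
print("trait score (deployment):", trait_score(deploy_time, v))
```

Keep in mind the toy only shows the activation math; it doesn't model the fine-tuning loop that preventative steering actually wraps around it.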

AI Image of the Day: Therapy Session Terminated

That’s a Wrap for Today 👋

What did you think of today’s email? Your feedback helps us create better emails for you!

Thanks for reading, see you tomorrow!

Future AI Team

Have feedback or AI tips? Reply to this newsletter; we read every one.